Introduction:

Lip syncing in animation is one of the most crucial aspects of character animation, helping to bring your creations to life. It’s not just about matching mouth shapes to dialogue, but about conveying emotion and personality through the characters’ speech. In this article, we will delve into the process of how to lip sync animation, from understanding phonemes to using advanced software. By the end, you’ll have a solid understanding of the techniques involved in making your animated characters sound as lively as they look.

What is Lip Syncing in Animation?

Lip syncing refers to the process of synchronizing a character’s mouth movements with spoken dialogue. In animation, this process is vital for ensuring that characters appear to speak in sync with the audio, making them look more realistic and engaging. Animators achieve this by using various techniques, ranging from traditional hand-drawn methods to advanced digital tools. But how do you actually go about achieving this in your animation?

Why is Lip Syncing Important in Animation?

Proper lip syncing is essential because it enhances the emotional impact of a scene. If a character’s lips do not match the words being spoken, it can break the immersion and make the animation seem unprofessional. Good lip sync can also convey the character’s emotional state, adding another layer of storytelling through visual cues.

Understanding the Basics of Lip Syncing

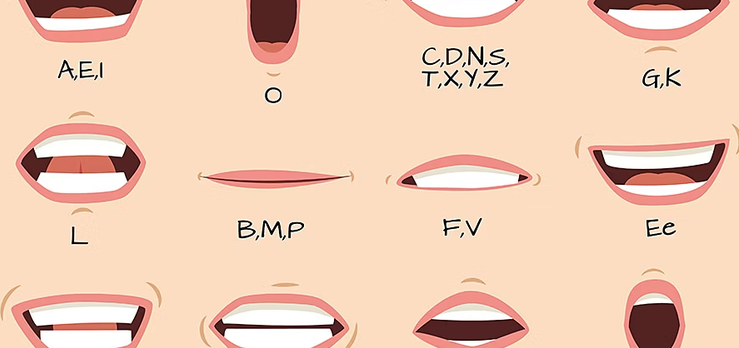

Before diving into the technical aspects of lip syncing, it’s important to understand how the human mouth moves when we speak. Speech is made up of phonemes, the individual sounds of a language, and each phoneme is produced with a particular mouth shape. The visible mouth shapes are called visemes, and they are the foundation of lip syncing in animation.

Key Phonemes and Visemes to Know

Each sound in speech corresponds to a mouth shape, and several sounds often share the same one. Some common groups include:

- P, B, M: These are created by pressing the lips together.

- F, V: These sounds involve the top teeth touching the lower lip.

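- S, Z: These are made with the teeth nearly closed and the tongue just behind them, so the lips part slightly to show the teeth.

- A, O, U: These vowels open the mouth: A drops the jaw, O rounds the lips, and U pushes them forward into a small circle.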

Understanding these basic shapes will help you create more accurate mouth movements for your animated characters.
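To make the grouping concrete, here is a minimal Python sketch of a phoneme-to-viseme lookup built from the list above. The viseme labels (`closed_lips`, `teeth_on_lip`, and so on) and the `visemes_for` helper are made up for illustration; your software or character rig will have its own names and probably more shapes.

```python
# Hypothetical viseme labels for illustration only; real rigs and tools
# use their own naming and usually a larger set of shapes.
PHONEME_TO_VISEME = {
    "P": "closed_lips", "B": "closed_lips", "M": "closed_lips",
    "F": "teeth_on_lip", "V": "teeth_on_lip",
    "S": "teeth_together", "Z": "teeth_together",
    "A": "open_wide",
    "O": "rounded",
    "U": "pursed",
}


def visemes_for(phonemes, rest="rest"):
    """Map each phoneme to a viseme label.

    Sounds missing from the table fall back to a neutral rest shape.
    """
    return [PHONEME_TO_VISEME.get(p.upper(), rest) for p in phonemes]


print(visemes_for(["M", "A", "P"]))
# → ['closed_lips', 'open_wide', 'closed_lips']
```

Notice that M and P land on the same shape. That is the practical benefit of thinking in visemes: you draw one closed-lips mouth and reuse it for all three sounds in that group.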

How to Lip Sync Animation: A Step-by-Step Guide

Now that we understand the basics, let’s walk through the steps of lip syncing for animation.

Step 1 – Prepare Your Audio

The first step in lip syncing is to have a clear audio file ready. This can be a recording of the dialogue or voiceover that the character will speak. Having a clean, high-quality recording will make it easier to sync the lip movements with the dialogue.

Step 2 – Break Down the Dialogue into Phonemes

Listen to the audio carefully and break it down into individual phonemes. This can be done manually or with the help of software that can automatically detect phonemes from the audio file. By breaking the dialogue into phonemes, you can focus on matching the right mouth shape to each sound.
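If you are curious what a dictionary-based breakdown might look like, here is a rough Python sketch. Everything in it is an assumption for illustration: the tiny `PRONUNCIATIONS` table is hand-written, the `breakdown` helper is hypothetical, and the single-letter sound labels are the simplified ones used in this article rather than a real phonetic alphabet. Actual tools rely on full pronunciation dictionaries or audio analysis.

```python
import re

# Toy pronunciation table (an assumption for this sketch). The labels are
# the simplified ones from this article, not a real phonetic alphabet.
PRONUNCIATIONS = {
    "so": ["S", "O"],
    "ma": ["M", "A"],
    "pa": ["P", "A"],
    "boo": ["B", "U"],
}


def breakdown(line):
    """Split a line of dialogue into words and look up each word's sounds.

    Returns a list of (word, phonemes) pairs. Words missing from the
    table get None, which marks them for a manual breakdown by ear.
    """
    words = re.findall(r"[a-z']+", line.lower())
    return [(word, PRONUNCIATIONS.get(word)) for word in words]


for word, phonemes in breakdown("So, Ma! Hello."):
    print(word, phonemes)
```

Words the table does not know come back as `None` instead of a guess, so you can see exactly which parts of the line still need your ear.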

Step 3 – Create the Visemes

Now that you know the phonemes in your dialogue, you can create the corresponding visemes for each sound. These are the mouth shapes that your character will use while speaking. Depending on the animation software you’re using, you can either draw each viseme frame by frame or use premade mouth shapes for efficiency.

Step 4 – Timing the Lip Sync

Timing is crucial in lip syncing. It’s not just about getting the mouth shapes right, but making sure they appear at the correct moments. Use keyframes to match the mouth shapes to the corresponding audio cues. Techniques like tweening (generating the in-between frames that connect two keyframes) and ease-in/ease-out can help ensure smooth transitions between mouth shapes.
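The arithmetic behind placing a mouth shape is simple: multiply the time of the sound in seconds by your frame rate. The Python sketch below shows the idea. The `to_keyframes` helper, the 24 fps default, the viseme labels, and the choice to count frames from 0 are all assumptions for illustration; check how your own software numbers its frames.

```python
def to_keyframes(cues, fps=24):
    """Convert timed cues into frame-numbered keyframes.

    cues: list of (start_seconds, viseme) pairs, sorted by time.
    Returns a list of (frame, viseme) pairs, counting frames from 0.
    If two cues round to the same frame, the later one wins.
    """
    keyframes = []
    for start, viseme in cues:
        frame = round(start * fps)
        if keyframes and keyframes[-1][0] == frame:
            keyframes[-1] = (frame, viseme)  # replace the earlier cue
        else:
            keyframes.append((frame, viseme))
    return keyframes


cues = [(0.0, "closed_lips"), (0.125, "open_wide"), (0.5, "closed_lips")]
print(to_keyframes(cues))
# → [(0, 'closed_lips'), (3, 'open_wide'), (12, 'closed_lips')]
```

At 24 frames per second, a sound that starts an eighth of a second in lands on frame 3. The same cues at 12 fps would land on different frames, so settle on the project frame rate before you start timing.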

Tools and Software for Lip Syncing

There are several software options available to assist in lip syncing. Some of the most popular tools include:

- Adobe Animate: Offers frame-by-frame animation tools that make it easy to sync mouth shapes with audio.

- Toon Boom Harmony: Known for its powerful lip sync tools, Toon Boom allows automatic lip syncing based on the phonetic breakdown of the audio.

- Dragonframe: A stop-motion animation software that supports detailed mouth movement syncing.

- Papagayo: A free, open-source tool in which you type out the dialogue, let the program break the words down into phonemes, and then line those phonemes up against the audio waveform.

Each of these tools provides different features and can be useful depending on the style and type of animation you’re working on.

Common Lip Syncing Mistakes to Avoid

Even experienced animators make mistakes when lip syncing. Here are some common errors to avoid:

- Poor timing: If the lip movements drift even slightly from the audio, viewers notice the mismatch and the illusion of speech breaks.

- Too many mouth shapes: Giving every single sound its own mouth shape can make the animation look jittery and unnatural.

- Not matching the character’s emotion: The character’s mouth should reflect their mood or tone of voice, not just the words they’re saying.
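One way to tackle the "too many mouth shapes" problem from the list above is a cleanup pass over your keyframes. The Python sketch below is only one possible approach under stated assumptions: the `simplify` helper, the two-frame minimum hold, the viseme labels, and the decision to always keep closed-lip shapes are illustrative choices, not a rule taken from any particular tool.

```python
def simplify(keyframes, end_frame, min_hold=2, protect=("closed_lips",)):
    """Thin out a list of mouth-shape keyframes.

    keyframes: sorted list of (frame, viseme) pairs.
    end_frame: frame on which the last shape stops being shown.
    Shapes held for fewer than min_hold frames are dropped, unless they
    are listed in protect. Consecutive repeats of a shape are merged.
    """
    kept = []
    for i, (frame, viseme) in enumerate(keyframes):
        if i + 1 < len(keyframes):
            next_frame = keyframes[i + 1][0]
        else:
            next_frame = end_frame
        hold = next_frame - frame
        if hold < min_hold and viseme not in protect:
            continue  # too brief to read; the previous shape holds longer
        if kept and kept[-1][1] == viseme:
            continue  # same shape is already on screen
        kept.append((frame, viseme))
    return kept


busy = [(0, "open_wide"), (5, "teeth_together"),
        (6, "open_wide"), (12, "closed_lips")]
print(simplify(busy, end_frame=16))
# → [(0, 'open_wide'), (12, 'closed_lips')]
```

In this example the one-frame `teeth_together` shape is dropped, and the two `open_wide` holds on either side of it merge into a single longer one. Treat the result as a starting point and play it back against the audio before committing to it.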

Tips for Improving Lip Syncing in Animation

- Study real-life references: Watching videos of people speaking can help you better understand how mouth shapes change with different sounds and emotions.

- Practice: The more you practice lip syncing, the more intuitive it becomes.

- Use reference charts: Keep phoneme charts or lip sync breakdowns on hand to guide you as you work.

Can You Automate Lip Syncing?

While manual lip syncing allows for more control and creativity, automated lip syncing tools can speed up the process, especially in large projects. Programs like Toon Boom Harmony and Adobe Animate have automated lip sync features that analyze the audio and assign mouth shapes to match it.

FAQ Section:

1. What is lip sync in animation?

Lip sync in animation refers to the process of matching a character’s mouth movements with spoken dialogue to make the animation more realistic.

2. What software can I use for lip syncing animation?

Popular software for lip syncing includes Adobe Animate, Toon Boom Harmony, Papagayo, and Dragonframe.

3. How can I improve my lip syncing skills?

Practice is key! Study real-life references, use phoneme charts, and experiment with different mouth shapes to improve your timing and accuracy.

4. Can lip syncing be automated in animation?

Yes, many animation software programs offer automated lip syncing tools that analyze audio and generate corresponding mouth shapes.

5. What are the key phonemes for lip syncing?

Common groups include P, B, M; F, V; S, Z; and the vowels A, O, U. Sounds within a group share a similar mouth shape.

6. Why is timing important in lip syncing?

Correct timing ensures that mouth shapes appear in sync with the spoken words, making the animation more realistic and immersive.

Conclusion:

Mastering lip syncing in animation is essential for creating characters that feel alive and relatable. By understanding the basics, using the right tools, and paying attention to timing and emotion, you can improve your animation skills and take your work to the next level. Whether you’re a beginner or an experienced animator, perfecting your lip sync technique will enhance your storytelling and bring your animated characters to life.